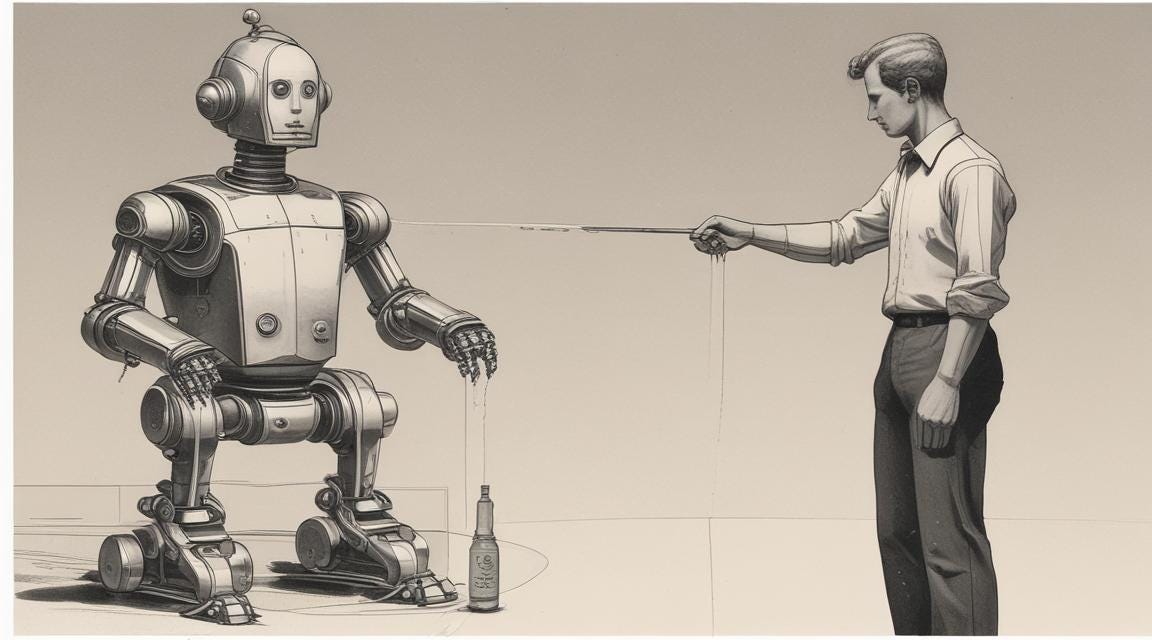

How Should We “Discipline” AI?

Easy to Discipline but Not Easy to Discipline Well

In the past year, many organizations have published AI governance frameworks, especially frameworks for generative AI. In this issue, I want to discuss this phenomenon and how I assess the usefulness of these frameworks.

If you look at any governance framework, it is about setting boundaries: the hard boundaries are laws, and the soft boundaries are guidelines.

Let’s use an analogy to understand this better. Most parents have gone through the phase of disciplining a child. Disciplining a child is easy: set boundaries and punish the child when those boundaries are crossed. However, any parent knows that while setting boundaries is relatively easy, setting boundaries that still allow the child to flourish is very challenging. This requires a feedback mechanism, which means observing and understanding how the child behaves after the boundaries are set. In child discipline, the feedback can come back very slowly, which is why in movies and dramas we sometimes see a child traumatised and scarred for the rest of his or her life (hey, we are talking about movies and dramas, so of course it is dramatised, but that does not mean it cannot happen, right?).

Assessment of Framework

Relating this back to the analogy, any organization can come up with an AI governance framework, but setting up a framework that curbs the abuse of AI without stunting innovation is challenging. Besides the framework itself, what I hope to see is the organization setting up a feedback mechanism that allows users of the framework to give feedback on what is too restrictive and what should be restricted.

Next, there should be a publicly available library of use cases. These can include successful use cases but, more importantly, failed ones. This library adds credibility to the framework, because an outsider looking at it will know that real use cases guided how the framework was set up.

And as time goes by, the framework should gather a growing list of endorsements from users who have benefitted from it.

Only with these three factors, (1) a feedback mechanism, (2) a library of use cases and (3) a growing list of endorsements, will I say that a governance framework has the credibility and value to increase the adoption of AI while managing the abuse and risks of using AI.

It will be interesting to see which framework comes out on top as time goes by. :)

Your thoughts? Please share them in the comments or PM me on my LinkedIn.

Like my content? Consider sharing it, please! :)

I wrote a thought experiment on how I would set up an AI Governance Committee, i.e. which areas of knowledge and expertise I would want it to have. Have a read here, and again I invite your perspectives and sharing. <Blog Post>

I like the suggestion of a library of use cases, especially the failed ones. Documenting WHY we failed is so important to the learning, whether the failure is attributed to poor design, flawed assumptions or even just poor timing (i.e. market not ready).